In the world of machine learning, we encounter several intriguing concepts: overfitting, underfitting, and bias. But did you know these terms have amusing parallels in our daily lives? Let’s dive into the whimsical world where machine learning meets the human mind 🧠.

Overfitting: The Overthinker’s Dilemma

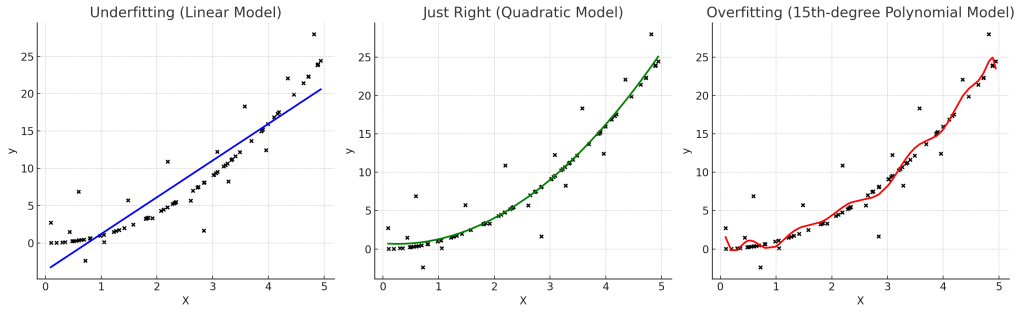

In machine learning, overfitting occurs when a model learns not just the underlying pattern but also the noise within the training data. The result is an overly complex model that looks great on the training data but performs poorly on new data. Imagine a plot where the boundary curve twists and turns excessively to fit every single data point, including the outliers (or noise).

Now, let’s translate this to human behavior. Picture an overthinker: someone who analyzes every possible scenario, including the ones that don’t really matter. Just as an overfitted model tries to fit even the noise, an overthinker considers every trivial detail, every passing thought, and every fleeting impression. Overthinkers create highly complex models of reality, filled with unnecessary details. They expend immense mental energy trying to predict every possible outcome, only to end up misinterpreting new experiences. Just as an overfitted model struggles with new data, overthinkers struggle to understand new situations and people, often seeing threats and complications where none exist. Sadly, we’ve built a society where overthinkers are hailed as geniuses with turbocharged brains, when in reality, they’re the ones struggling the most, wrestling with every trivial detail like it’s a life-or-death riddle.

Maybe you know someone who spends two hours drafting a three-sentence email, contemplating every word choice and punctuation mark, fearing the recipient might misinterpret a comma 😣. Well, that’s overthinking! Or you know that person who, after posting a photo on Instagram, spends the rest of the day analyzing each like and comment, wondering if a friend’s lack of response signals a secret grudge 😀. Maybe it’s you!

Underfitting: The No-Thinker’s Bliss

On the opposite end of the spectrum is underfitting. In machine learning, underfitting happens when a model is too simple to capture the underlying pattern of the data. It misses the essential complexity required to make accurate predictions. Imagine a plot where the boundary curve is just a straight line, completely ignoring the actual distribution of data points.

This is akin to the mindset of a “no-thinker” – someone who doesn’t care about anything happening around them. These individuals appear overly cool, seemingly unaffected by experiences, people, or societal duties. They miss the complexities of life, just as an underfitted model misses the essential details of the data. Imagine you’re at a wedding, and the best man (a notorious no-thinker) forgets the rings. While everyone panics, he’s chilling, sipping a mimosa, saying, “Relax, it’s all about the love, man.” Meanwhile, the bride looks like she’s about to launch him into orbit. Or consider a coworker who’s so cool that deadlines are merely “suggestions.” When asked about the missed deadline, they respond with, “What’s the rush? Time is a social construct, bro.”

The Perils of High Overthinking and High Underfitting

Meet the models that are the epitome of high overthinking and high underfitting – a disastrous combo that translates perfectly to certain people’s behavior. These models (and people) have all the theoretical knowledge from overanalyzing every tiny detail, but when it comes to taking action, they’re as effective as a chocolate teapot. These folks will spend hours dissecting and debating the minutiae of a situation, crafting elaborate plans that would make a NASA engineer proud. Yet, when it’s time to execute, they suddenly morph into the embodiment of ‘super-cool’ (cue sarcasm) indifference, convincing themselves that action isn’t needed. They perfectly miss the mark in every situation, armed with all the right answers but utterly failing to get anything done. It’s like having a top-notch GPS system but deciding to wing it and ignore every single direction.

Here’s a mathematical representation of underfitted, overfitted, and ‘just-right’ fitted models:
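If you’d like to see the three cases as numbers rather than metaphors, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the toy data (a sine wave plus noise), the names `true_fn` and `results`, and the choice of polynomial degrees 1, 3, and 11 to stand in for the no-thinker, the balanced thinker, and the overthinker. It fits each polynomial to 12 noisy training points and reports the error on the training data and on fresh test data.

```python
import numpy as np

# Hypothetical toy data (not from the article): a sine wave plus Gaussian noise.
rng = np.random.default_rng(0)

def true_fn(x):
    """The underlying pattern the models are trying to learn."""
    return np.sin(np.pi * x)

x_train = np.linspace(-1.0, 1.0, 12)      # 12 training points
x_test = np.linspace(-0.95, 0.95, 50)     # 50 fresh points the models never saw
y_train = true_fn(x_train) + rng.normal(0.0, 0.1, x_train.size)
y_test = true_fn(x_test) + rng.normal(0.0, 0.1, x_test.size)

def mse(coeffs, x, y):
    """Mean squared error of a polynomial (np.polyfit coefficients) on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

# degree 1  -> a straight line (too simple: the no-thinker)
# degree 3  -> enough flexibility for one wave (the balanced thinker)
# degree 11 -> passes through all 12 training points (the overthinker)
results = {}
for degree in (1, 3, 11):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree {degree:2d}: train MSE = {train_err:.4f}, test MSE = {test_err:.4f}")
```

The training error can only go down as the degree goes up, and the degree-11 polynomial drives it to essentially zero because it memorizes every point, noise included. The straight line does badly on both sets. On the test data, the degree-11 model will usually look worse than the degree-3 one, though the exact numbers depend on the random seed and the noise level.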

The Just-Right Model: Balanced Thinking

The goal in machine learning is to strike a balance between underfitting and overfitting – to create a model that generalizes well to new data. Such a model doesn’t get bogged down by noise, nor does it oversimplify reality. Similarly, the ideal mindset balances overthinking and no-thinking. These individuals approach new experiences objectively, without the noisy baggage of the past or the indifference of the perpetually chill. They process information efficiently, understanding new situations and people as they are, rather than through a distorted lens.

Just as a well-tuned machine learning model performs best on unseen data, a balanced mindset handles new experiences most effectively. So, strive for that sweet spot. Don’t get tangled in the noise like an overthinker, nor miss the essence like a no-thinker. Embrace a balanced perspective, and you’ll navigate life’s complexities with finesse and clarity. Let’s train our mental models wisely and enjoy the delightful dance between thought and reality!